Regularization in machine learning: meaning

To understand regularization and its link with machine learning, we first need to understand why we need it. Regularization is an important concept in machine learning because it addresses the overfitting problem.


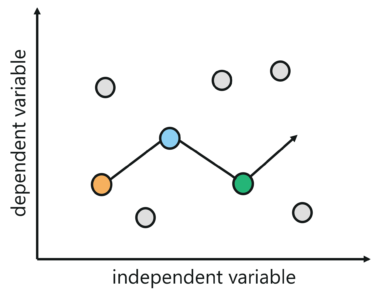

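A simple relation for linear regression looks like this:

$$Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p$$

Here $Y$ is the predicted response and $\beta_0, \dots, \beta_p$ are the coefficient estimates for the predictors $X_1, \dots, X_p$.

Regularization can be applied to objective functions in ill-posed optimization problems. I have covered the entire concept in two parts. In reality, optimization is a lot more profound in usage.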

Ave Coders: in Part 2 of the Necessary Theory for Machine Learning we will look at hyperparameters, what they do, and how they differ from parameters. Regularization is a technique used to solve the overfitting problem of machine learning models. In simple terms, regularization takes all the features into account but limits the effect of those features on the model's output.

In the context of machine learning, regularization is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero.
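As a rough illustration of coefficients shrinking towards zero, here is a minimal sketch using the closed-form ridge estimate for a one-feature model without an intercept. The function name `ridge_slope`, the toy data, and the penalty strengths are assumptions made for this example only.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form ridge estimate for the one-feature model y ≈ beta * x.

    Minimizes sum((y - beta * x)**2) + lam * beta**2 (no intercept).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data, roughly y = 2x (made up for illustration).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# lam = 0 is ordinary least squares; a larger lam shrinks the slope further.
slopes = [ridge_slope(xs, ys, lam) for lam in (0.0, 1.0, 10.0, 100.0)]
for lam, beta in zip((0.0, 1.0, 10.0, 100.0), slopes):
    print(f"lambda={lam:>6}: beta={beta:.4f}")
```

The estimate moves closer to zero as the penalty strength grows, but for any finite penalty it does not reach exactly zero.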

It means the model is not able to generalize to unseen data. Regularization is the method used to address this.

It is one of the key concepts in machine learning, as it helps choose a simple model rather than a complex one. It is not a complicated technique, and it simplifies the machine learning process.

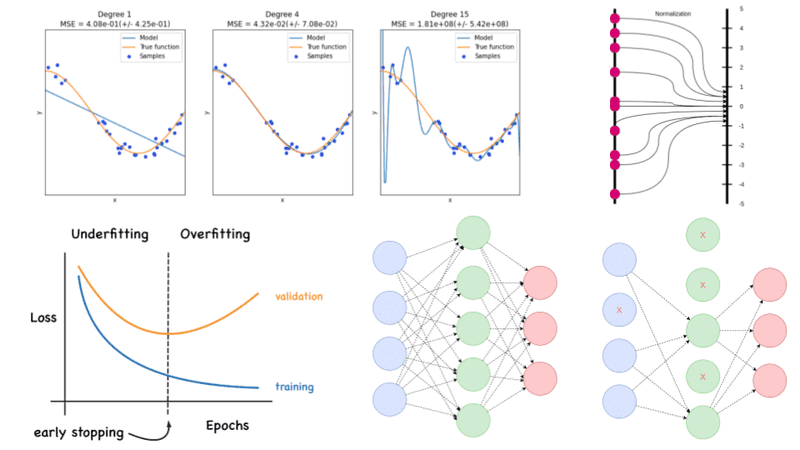

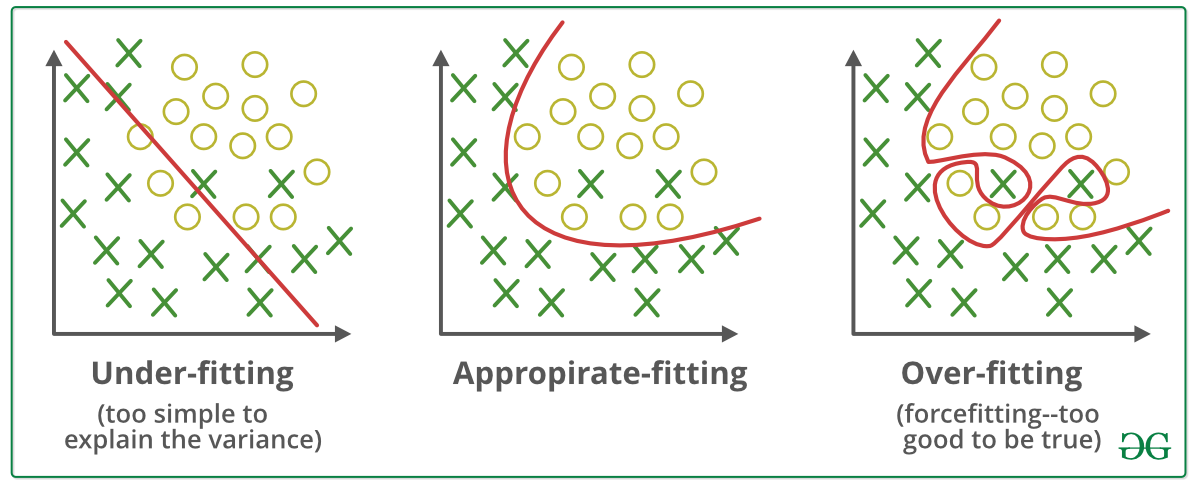

A major concern while training a neural network, or any machine learning model, is avoiding overfitting. In simple words, regularization discourages learning a more complex or flexible model in order to prevent overfitting. Sometimes one resource is not enough to give you a good understanding of a concept.

Machine learning is seen as a part of artificial intelligence. Machine learning algorithms build a model based on sample data, known as training data, in order to make predictions or decisions without being explicitly programmed to do so. Part 2 will explain what regularization is and give some proofs related to it. Machine Learning: Model Evaluation and Regularization. Lei Li (leilics), UCSB.

As seen above, we want our model to perform well on both the training data and new, unseen data, meaning the model must be able to generalize. In other words, this technique discourages learning a more complex or flexible model so as to avoid the risk of overfitting. Then we have two terms.

This is where regularization comes into the picture: it shrinks, or regularizes, these learned estimates towards zero by adding a penalty term to the loss function being optimized, so that the model predicts the value of Y accurately on new data as well. Here, "unknown" means data that the model has not seen yet. Regularization is a concept by which machine learning algorithms can be prevented from overfitting a dataset.

Part 1 deals with the theory of why regularization came into the picture and why we need it. In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is the process of adding information in order to solve an ill-posed problem or to prevent overfitting.

It also enhances the performance of models on new inputs. When someone wants to model a problem, let's say trying to predict ...

Machine learning (ML) is the study of computer algorithms that can improve automatically through experience and by the use of data. Setting up a machine learning model is not just about feeding it data. We all know machine learning is about training a model with relevant data and using the model to predict unknown data.

Regularization achieves this by introducing a penalizing term in the cost function which assigns a higher penalty to complex curves. Regularization is one of the most important concepts of machine learning. It is very important to understand regularization to train a good model.
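To make the penalizing term concrete, the sketch below computes a cost as mean squared error plus a squared-coefficient penalty for two polynomial curves that both pass exactly through the same three points. The helper names, the penalty form (an L2-style penalty that skips the intercept), and the value of `lam` are assumptions chosen for illustration, not a prescribed recipe.

```python
def predict(coeffs, x):
    """Evaluate the polynomial coeffs[0] + coeffs[1]*x + coeffs[2]*x**2 + ..."""
    return sum(c * x ** k for k, c in enumerate(coeffs))


def penalized_cost(coeffs, xs, ys, lam):
    """Mean squared error plus lam times the sum of squared non-intercept coefficients."""
    mse = sum((predict(coeffs, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
    penalty = lam * sum(c * c for c in coeffs[1:])
    return mse + penalty


xs = [0.0, 1.0, 2.0]
ys = [0.0, 1.0, 2.0]

straight_line = [0.0, 1.0]           # y = x
wiggly_cubic = [0.0, 3.0, -3.0, 1.0]  # y = x**3 - 3*x**2 + 3*x, same three points

for name, coeffs in (("line", straight_line), ("cubic", wiggly_cubic)):
    print(name,
          "unpenalized:", penalized_cost(coeffs, xs, ys, lam=0.0),
          "penalized:", round(penalized_cost(coeffs, xs, ys, lam=0.1), 4))
```

Both curves have zero training error, so the unpenalized cost cannot tell them apart; once the penalty is added, the curve with the larger coefficients costs more.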

When you train a model with artificial neural networks, you will encounter numerous problems. Slides borrowed, with modification, from Bhiksha Raj's 11485 and Mu Li and Alex Smola's 157 courses on Deep Learning. Regularization is essential in machine learning and deep learning.

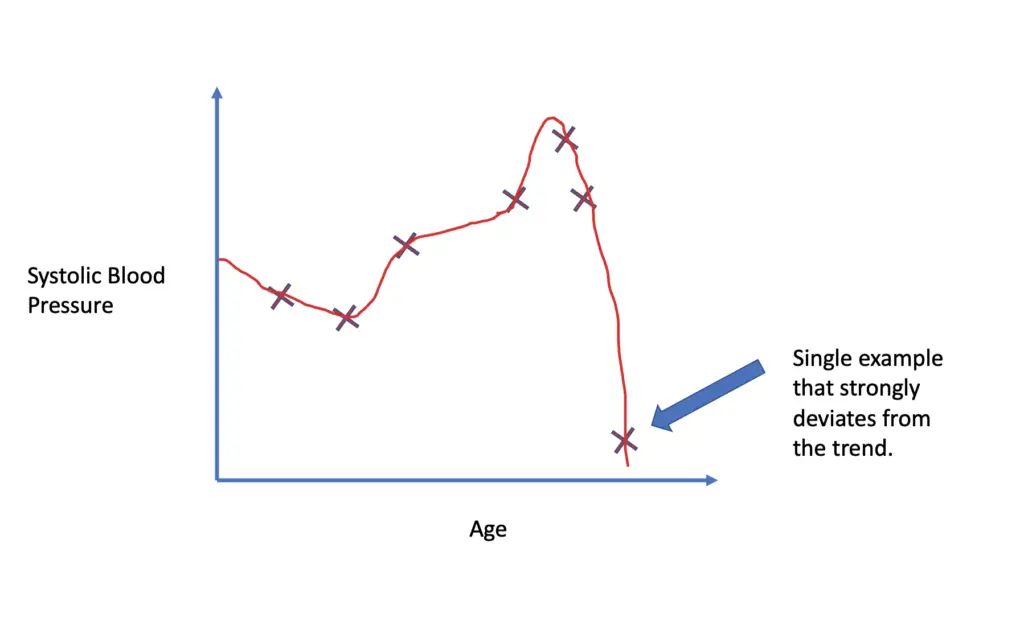

Regularization is an application of Occam's razor. Overfitting is a phenomenon that occurs when a model learns the detail and noise in the training data to such an extent that it negatively impacts the model's performance on new data. In other terms, regularization means discouraging the learning of a more complex or more flexible machine learning model in order to prevent overfitting.
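The overfitting behaviour described above can be sketched with a tiny, hand-made dataset: a flexible polynomial that passes through every noisy training point is compared with a plain straight-line fit. All names and data values here are assumptions for illustration; the "noise" is picked by hand so the example is deterministic.

```python
def lagrange_predict(train_x, train_y, x):
    """Evaluate the polynomial that passes exactly through every training point."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(train_x, train_y)):
        term = yi
        for j, xj in enumerate(train_x):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def fit_line(train_x, train_y):
    """Ordinary least-squares slope and intercept for one feature."""
    n = len(train_x)
    mean_x = sum(train_x) / n
    mean_y = sum(train_y) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(train_x, train_y))
    sxx = sum((x - mean_x) ** 2 for x in train_x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def mse(model, xs, ys):
    """Mean squared error of a one-argument prediction function."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Underlying relation is y = x; the training targets carry hand-picked noise.
train_x = [0.0, 1.0, 2.0, 3.0]
train_y = [0.0, 1.5, 1.5, 3.0]
test_x = [0.5, 2.5, 4.0]
test_y = [0.5, 2.5, 4.0]

slope, intercept = fit_line(train_x, train_y)


def flexible_model(x):
    return lagrange_predict(train_x, train_y, x)


def simple_model(x):
    return slope * x + intercept


print("flexible train/test MSE:", mse(flexible_model, train_x, train_y),
      mse(flexible_model, test_x, test_y))
print("simple   train/test MSE:", mse(simple_model, train_x, train_y),
      mse(simple_model, test_x, test_y))
```

The flexible model reproduces the training targets exactly, noise included, yet its error on the held-out points is far larger than that of the straight line.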

The regularization term or penalty imposes a cost on the optimization. It is a technique to prevent the model from overfitting by adding extra information to it. Sometimes the machine learning model performs well with the training data but does not perform well with the test data.

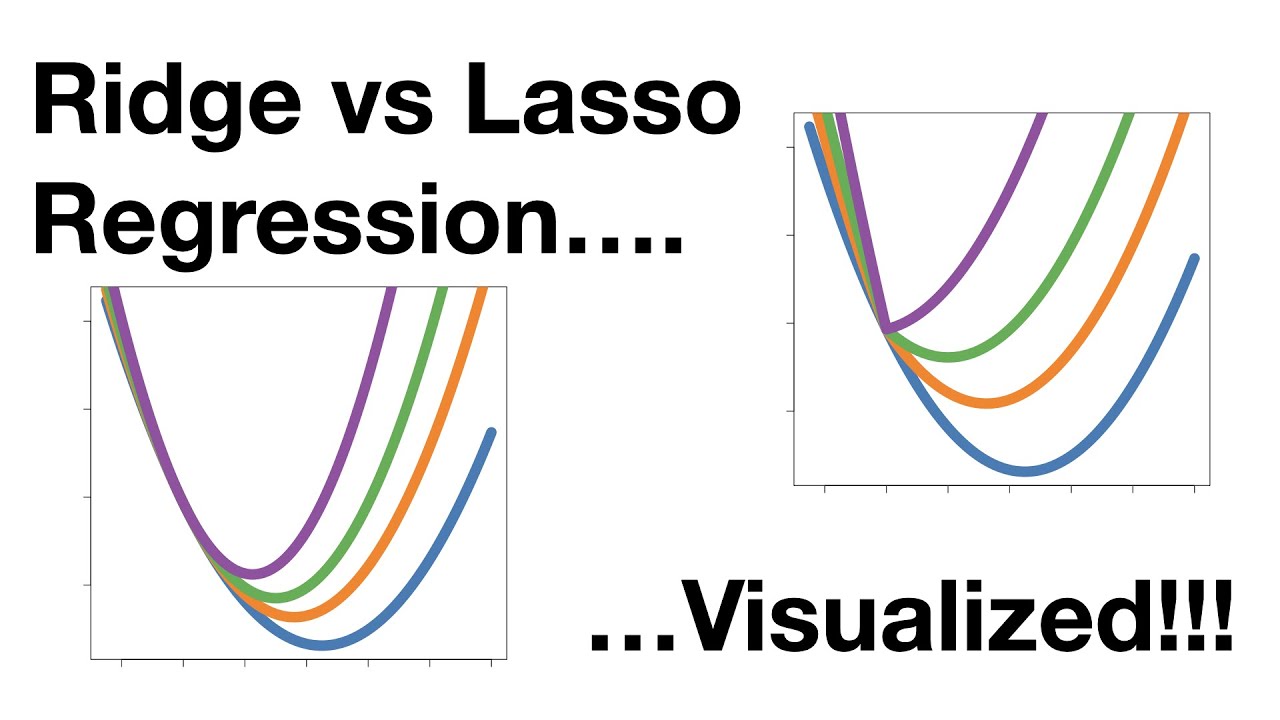

There are essentially two types of regularization techniques: L1 regularization, or LASSO regression, and L2 regularization, or ridge regression. Regularization is one of the basic and most important concepts in the world of machine learning.
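One way to see how the two penalty types behave differently is to apply each penalty's shrinkage step to a single coefficient in isolation. The sketch below is a simplification under stated assumptions: it solves a one-dimensional penalized problem per coefficient instead of fitting a full regression, and the function names and threshold `t` are made up for the example.

```python
def l1_shrink(b, t):
    """Soft-thresholding: the z that minimizes 0.5 * (z - b)**2 + t * abs(z)."""
    if b > t:
        return b - t
    if b < -t:
        return b + t
    return 0.0


def l2_shrink(b, t):
    """The z that minimizes 0.5 * (z - b)**2 + 0.5 * t * z**2."""
    return b / (1.0 + t)


coeffs = [3.0, -0.4, 0.2, -2.0]

lasso_like = [l1_shrink(b, 0.5) for b in coeffs]  # [2.5, 0.0, 0.0, -1.5]
ridge_like = [l2_shrink(b, 0.5) for b in coeffs]  # every entry scaled down, none zero

print("L1:", lasso_like)
print("L2:", [round(b, 4) for b in ridge_like])
```

The L1-style step sets small coefficients exactly to zero, which is why LASSO is often associated with feature selection, while the L2-style step scales every coefficient down without removing any.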

Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set while avoiding overfitting. It is also considered a process of adding more information to resolve a complex issue and avoid overfitting. In the general machine learning sense, optimization means solving an objective function to find its maximum or minimum.

Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data. Let us understand this through an example.

Regularization is a technique used in an attempt to solve the overfitting problem in statistical models. First of all, I want to clarify how this problem of overfitting arises. I have learnt regularization from different sources, and I feel learning from different sources is very ... Regularization is the most widely used technique for penalizing complex models in machine learning; it is deployed to reduce overfitting, or to shrink generalization error, by keeping network weights small.
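The idea of keeping weights small during training can be sketched with gradient descent on a single-weight model, where the L2 penalty contributes an extra gradient term that pulls the weight towards zero at every step. This is a toy stand-in for a network, not a neural network implementation; the function name `train_weight`, the learning rate, the step count, and the data are all assumptions.

```python
def train_weight(xs, ys, lam, lr=0.01, steps=2000):
    """Gradient descent on mean((w*x - y)**2) + lam * w**2 for a single weight w."""
    w = 0.0
    n = len(xs)
    for _ in range(steps):
        grad_loss = sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        grad_penalty = 2.0 * lam * w  # the extra pull towards zero from the penalty
        w -= lr * (grad_loss + grad_penalty)
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = train_weight(xs, ys, lam=0.0)
w_decay = train_weight(xs, ys, lam=1.0)
print(f"no penalty: w={w_plain:.4f}, with penalty: w={w_decay:.4f}")
```

With the penalty switched on, training settles on a smaller weight than the unpenalized fit, trading a little training error for a more constrained model.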
